Machine Vision for Monkeys

Since 2020, as part of my work as a senior postdoc, I have been embedding machine learning approaches into my scientific projects to address three crucial, yet still unresolved, issues:

- Being able to identify which particular animal was operating the touchscreen devices at any given time. This was necessary to run standardised yet individualised training and enrichment protocols (Berger et al 2018, Calapai et al 2022, Cabrera-Moreno et al 2022);

- Being able to autonomously and efficiently extract the animals’ location, identity, posture, and behaviour from video recordings with only minimal human supervision (ongoing project);

- Being able to track the animals’ gaze while they are playing with touchscreen devices (ongoing project).

Animal identification: a real-time, low-latency, machine-learning approach

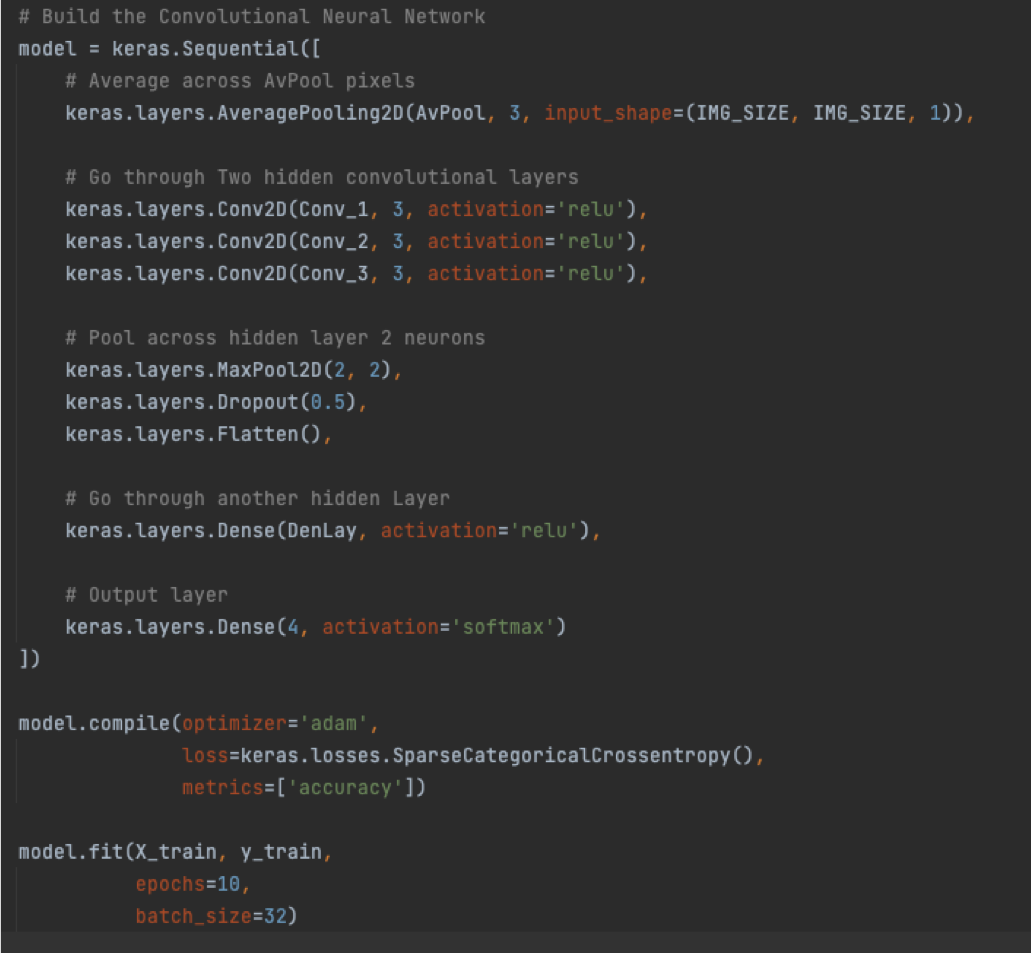

For this purpose, I trained a convolutional neural network with TensorFlow in Python 3.7. The network consisted of 9 layers in total, from input to output: an average-pooling input layer (6 × 3 pool size); 3 convolutional layers (3 × 3 kernels, ‘relu’ activation, with 64, 16, and 32 filters, respectively); 3 further layers (a 2 × 2 MaxPool layer, a Dropout layer, and a Flatten layer); 1 hidden Dense layer (‘relu’ activation); and a final Dense output layer (‘softmax’ activation). The network was compiled with the ‘adam’ optimizer, the sparse categorical cross-entropy loss, and ‘accuracy’ as the metric, and was trained for 10 epochs with a batch size of 32. The output layer contained one neuron per animal in the group plus an additional neuron, here called null, that was trained on pictures triggered by accident (e.g., when an animal touched the screen with its back).
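A minimal Keras sketch of the stack described above follows. It assumes the layers are applied in the order listed (so MaxPool, Dropout and Flatten come after the third convolution). The input size (`INPUT_SHAPE`), the number of animals (`N_ANIMALS`), the hidden Dense width (`DENSE_UNITS`) and the dropout rate (`DROPOUT_RATE`) are hypothetical values not given in the text, `build_id_network` is an illustrative name, and the code targets a current TensorFlow 2.x release rather than the original Python 3.7 setup.

```python
import numpy as np
import tensorflow as tf

# Hypothetical values: none of these are stated in the text.
INPUT_SHAPE = (120, 60, 3)  # assumed height x width x RGB of a touchscreen snapshot
N_ANIMALS = 3               # assumed number of animals in one housing group
DENSE_UNITS = 64            # assumed width of the hidden Dense layer
DROPOUT_RATE = 0.25         # assumed dropout rate


def build_id_network(n_animals=N_ANIMALS, input_shape=INPUT_SHAPE):
    """Nine-layer identification network; the extra output unit is the 'null' class."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        tf.keras.layers.AveragePooling2D(pool_size=(6, 3)),          # layer 1: down-sample input
        tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),       # layer 2
        tf.keras.layers.Conv2D(16, (3, 3), activation="relu"),       # layer 3
        tf.keras.layers.Conv2D(32, (3, 3), activation="relu"),       # layer 4
        tf.keras.layers.MaxPooling2D(pool_size=(2, 2)),              # layer 5
        tf.keras.layers.Dropout(DROPOUT_RATE),                       # layer 6
        tf.keras.layers.Flatten(),                                   # layer 7
        tf.keras.layers.Dense(DENSE_UNITS, activation="relu"),       # layer 8
        tf.keras.layers.Dense(n_animals + 1, activation="softmax"),  # layer 9: animals + null
    ])
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model


model = build_id_network()
# Training as described in the text would be:
# model.fit(x_train, y_train, epochs=10, batch_size=32)
probs = model(np.zeros((1,) + INPUT_SHAPE, dtype="float32")).numpy()
print(probs.shape)  # (1, 4)
```

Labels are integer class indices (here assumed to be 0 to `N_ANIMALS - 1` for the animals and `N_ANIMALS` for null), which is the format the sparse categorical cross-entropy loss expects.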

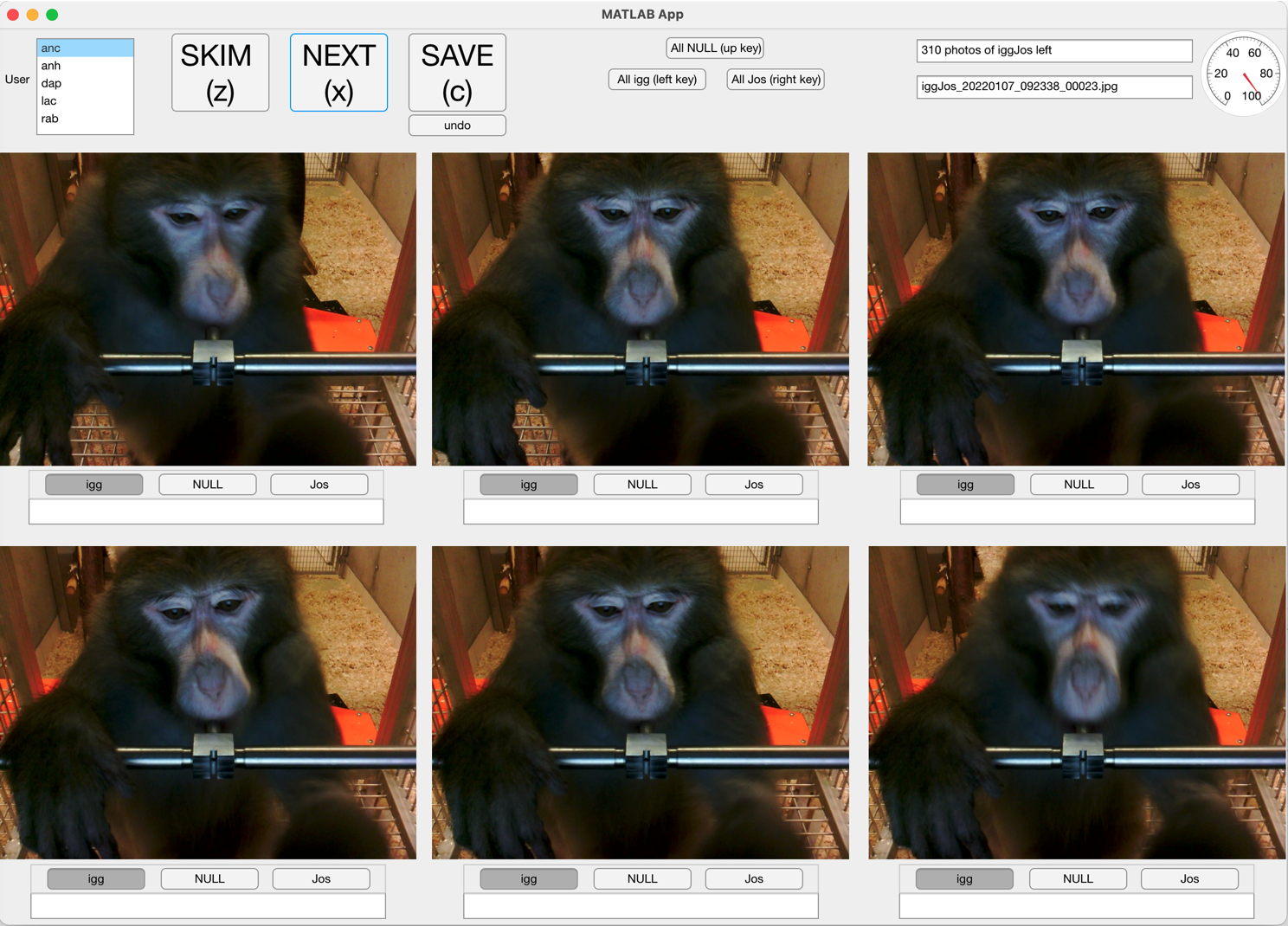

To label the 40k images needed to establish ground truth and evaluate the different networks, I developed a graphical user interface (MATLAB) that allowed my collaborators and me to label 50 pictures per minute (3k per hour). Within the interface, users could label images in chronological order; because animals tend to interact with the touchscreen in bursts, consecutive pictures often show the same animal.
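The GUI itself was written in MATLAB; the Python sketch below only illustrates why chronological order helps. It groups capture times into bursts and pre-fills one label per burst, so the annotator only corrects exceptions. The gap threshold (`MAX_GAP`), the helpers `group_into_bursts` and `propagate_label`, and the label `"animal_A"` are hypothetical, and pre-filling labels is an assumption about how such an interface could speed up annotation, not a description of the original tool.

```python
from datetime import datetime, timedelta

MAX_GAP = timedelta(seconds=10)  # hypothetical: a longer pause starts a new burst


def group_into_bursts(timestamps, max_gap=MAX_GAP):
    """Split chronologically sorted capture times into bursts of touches.

    Returns a list of bursts, each a list of indices into `timestamps`.
    """
    bursts = []
    for i, t in enumerate(timestamps):
        if bursts and t - timestamps[bursts[-1][-1]] <= max_gap:
            bursts[-1].append(i)  # close to the previous picture: same burst
        else:
            bursts.append([i])    # first picture or long pause: new burst
    return bursts


def propagate_label(labels, burst, label):
    """Pre-fill one label for every picture in a burst (hypothetical helper)."""
    for i in burst:
        labels[i] = label
    return labels


# Toy example: two bursts separated by a three-minute pause.
t0 = datetime(2021, 5, 3, 9, 0, 0)
times = [t0, t0 + timedelta(seconds=2), t0 + timedelta(seconds=5),
         t0 + timedelta(minutes=3), t0 + timedelta(minutes=3, seconds=4)]
bursts = group_into_bursts(times)
print(bursts)  # [[0, 1, 2], [3, 4]]
labels = propagate_label([None] * len(times), bursts[0], "animal_A")
print(labels)  # ['animal_A', 'animal_A', 'animal_A', None, None]
```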

The same approach was used with rhesus macaques in a study that is currently in preparation. Overall, across long-tailed and rhesus macaques, this approach was used to identify 11 animals belonging to 4 different housing groups. As shown in the figure below, the number of animals per network made no difference to accuracy (MultiNet vs OneNet), whereas the framework used to train the network did (Keras vs CoreML): the model I designed and trained myself performed significantly better than the black-box model trained with CoreML on a MacBook Pro (measured across 8 animals and over 30k data points).

The best model, the Keras one, achieved an average performance of 97.5% across 8 test animals and 40k pictures.
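An "average across animals" can mean either the mean of per-animal accuracies (macro average, every animal weighs the same) or the fraction of all pictures classified correctly (micro average, animals with many pictures weigh more); the two differ when animals contribute unequal numbers of pictures. The text does not say which was used, so the sketch below simply computes both on hypothetical toy labels (`"A"`, `"B"`).

```python
from collections import defaultdict


def per_animal_accuracy(y_true, y_pred):
    """Accuracy computed separately for each true animal identity."""
    hits, totals = defaultdict(int), defaultdict(int)
    for t, p in zip(y_true, y_pred):
        totals[t] += 1
        hits[t] += int(t == p)
    return {animal: hits[animal] / totals[animal] for animal in totals}


def macro_and_micro_accuracy(y_true, y_pred):
    """Return (mean of per-animal accuracies, overall fraction correct)."""
    per_animal = per_animal_accuracy(y_true, y_pred)
    macro = sum(per_animal.values()) / len(per_animal)
    micro = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
    return macro, micro


# Toy data: animal A is pictured far more often than animal B.
y_true = ["A"] * 8 + ["B"] * 2
y_pred = ["A"] * 8 + ["B", "A"]
print(macro_and_micro_accuracy(y_true, y_pred))  # (0.75, 0.9)
```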

Technically, the entire process of taking the picture, feeding it to the model, and loading the target corresponding to the identified animal took 176 ms, making this approach suitable for real-time use.
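The 176 ms figure covers the whole chain from capture to target loading. The sketch below shows one way to measure such an end-to-end latency with `time.perf_counter`. The functions `capture_picture`, `classify` and `load_target` and the `TASKS` mapping (in which the null class loads nothing) are hypothetical placeholders; in a real setup `classify` would run the trained network on the frame.

```python
import time

import numpy as np

# Hypothetical mapping from predicted class to the target to load; class 3 is null.
TASKS = {0: "task_animal_0", 1: "task_animal_1", 2: "task_animal_2", 3: None}


def capture_picture():
    """Placeholder for grabbing a frame from the touchscreen camera."""
    return np.zeros((120, 60, 3), dtype=np.float32)


def classify(frame):
    """Placeholder for the network: ignores the frame and returns fixed scores."""
    scores = np.array([0.1, 0.7, 0.1, 0.1])
    return int(np.argmax(scores))


def load_target(animal_id):
    """Return the target for this animal, or None for the null class."""
    return TASKS[animal_id]


def identify_and_load():
    """Run capture -> classify -> load once and report the latency in ms."""
    start = time.perf_counter()
    frame = capture_picture()
    animal_id = classify(frame)
    target = load_target(animal_id)
    latency_ms = (time.perf_counter() - start) * 1000.0
    return animal_id, target, latency_ms


animal_id, target, latency_ms = identify_and_load()
print(animal_id, target, f"{latency_ms:.3f} ms")
```

Timing the full chain, rather than the model call alone, is what matters for real-time use, since camera access and task loading add to the delay the animal experiences.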

In my 2022 publication as senior author, on long-tailed macaques, this technique was used in real time to achieve individualised, step-wise training on a visuo-acoustic discrimination task.

To find the appropriate network configuration for the task, I automated a bootstrap procedure to search for the best parameters and ran it on Google Colab to make use of GPU computation. The parameters included the size of the average-pooling kernel (AvPool), the number of units in each of the three convolutional hidden layers (Conv_1, Conv_2, Conv_3), and the number of units in the hidden dense layer (DenLay). Using a test dataset of 3,000 pictures of two male rhesus macaques taken with the same device in the same facility, we trained and tested 46 combinations of these parameters. Finally, we compared the performance of the 46 resulting networks and selected the parameter combination of the network with the highest accuracy (98.7%), which was used as the final configuration for the network during the experiment.
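The text names the searched parameters but not their candidate values or how combinations were drawn. The sketch below shows the procedure as a random search: it samples 46 distinct combinations from a hypothetical grid (`SEARCH_SPACE`), scores each one, and keeps the best. This is one way to automate such a sweep, not necessarily the author's exact procedure; `toy_evaluate` is a hypothetical stand-in for building, training and testing a network with a given configuration.

```python
import itertools
import random

# Hypothetical candidate values: only the parameter names come from the text.
SEARCH_SPACE = {
    "AvPool": [(3, 3), (6, 3), (6, 6)],
    "Conv_1": [16, 32, 64],
    "Conv_2": [16, 32, 64],
    "Conv_3": [16, 32, 64],
    "DenLay": [32, 64, 128],
}


def sample_configurations(space, n, seed=0):
    """Draw n distinct parameter combinations uniformly from the full grid."""
    names = list(space)
    grid = list(itertools.product(*(space[name] for name in names)))
    rng = random.Random(seed)
    return [dict(zip(names, values)) for values in rng.sample(grid, n)]


def search(space, evaluate, n=46, seed=0):
    """Score each sampled configuration; return (best_acc, best_cfg, all_results)."""
    results = [(evaluate(cfg), cfg) for cfg in sample_configurations(space, n, seed)]
    best_acc, best_cfg = max(results, key=lambda r: r[0])
    return best_acc, best_cfg, results


def toy_evaluate(cfg):
    """Placeholder score; in practice: build the network with cfg, fit, return test accuracy."""
    return cfg["Conv_1"] / 64 * 0.5 + cfg["DenLay"] / 128 * 0.5


best_acc, best_cfg, results = search(SEARCH_SPACE, toy_evaluate)
print(len(results), best_acc, best_cfg)
```

Since each evaluation means training a full network, sampling a fixed budget of combinations (here 46 out of 243) keeps the GPU time bounded, at the cost of possibly missing the single best combination in the grid.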

This approach is described in detail in a 2022 peer-reviewed article on the visuo-acoustic training and assessment of captive long-tailed macaques (Cabrera-Moreno et al 2022).

Autonomous behavioural tracking of rhesus macaques in breeding groups

In this ongoing project, I am the lead developer and coordinator of a group of engineers and data scientists from different research groups of the Cognitive Neuroscience Laboratory (CNL) at the German Primate Center.

Inspired by successful software solutions such as the one by Marks et al 2022, published in Nature Machine Intelligence (see figure below), my group and I are developing stand-alone software to track, identify, and extract the posture of rhesus macaques in semi-naturalistic settings (such as large breeding colonies and electrophysiology setups for freely moving animals), with the aim of autonomously assessing the animals’ behaviour 24/7.

For more details please feel free to contact me.

Video-based, real-time gaze estimation embedded in touchscreen tasks

In this ongoing project, I am applying DeepLabCut as well as customised machine-learning pipelines to detect the eyes of rhesus macaques and common marmosets in real time while the animals use touchscreen devices for cognitive training, assessment, and enrichment.

For more details please feel free to contact me.